Creating an Audio-Based Indexing System in Yebr

This document is currently in early beta. Part of the series on Yebr’s experimental AI.

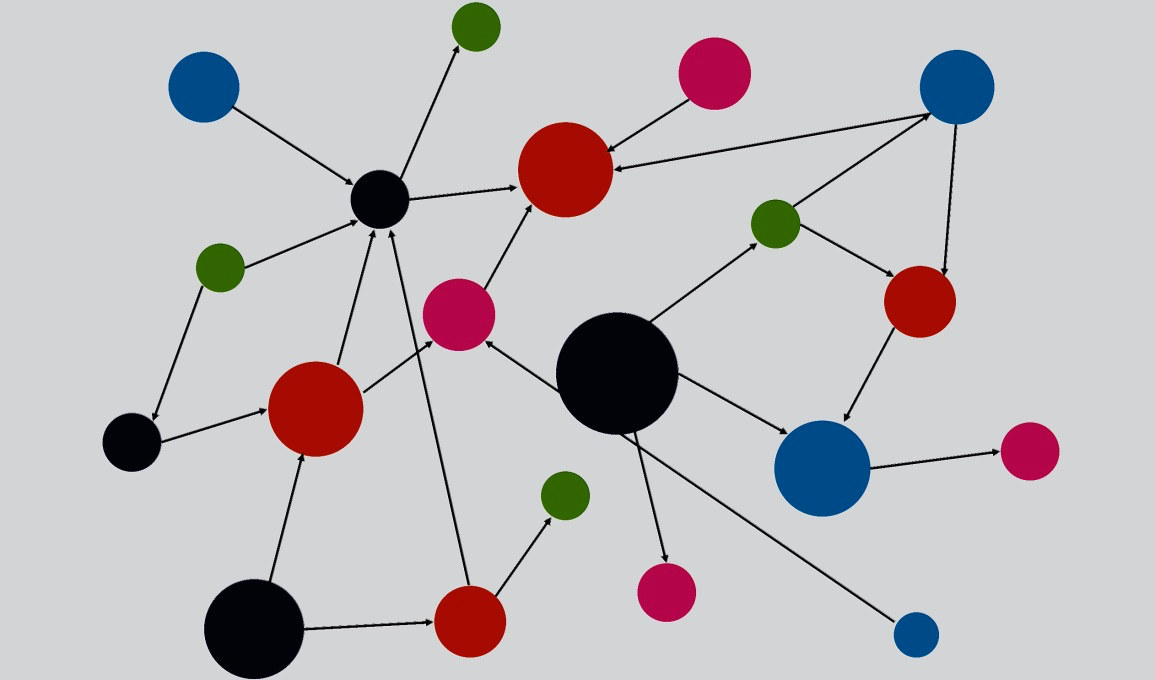

A combination of audio processing and AI that will help Yebr recognise tracks found anywhere on the web. The system is driven by spiders that find content and by a recognition engine that builds a database graph.

Introduction

Yebr mainly sources its data from YouTube. To power music discovery and content recommendations, Yebr originally relied on an indexing system built on YouTube video IDs, mapping each ID to song metadata such as the title and channel. Whenever a video was streamed, its unique ID was added upstream to a SQL-like database. This produced a subset of the full YouTube index containing only the videos Yebr users had streamed, and other Yebr functions, such as recommendations, used this sub-index as their corpus.
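A minimal sketch of how such a stream-driven sub-index behaves, using Python’s built-in `sqlite3` as a stand-in for the SQL-like database. The table layout and the helpers `open_legacy_index`, `record_stream` and `recommendation_corpus` are hypothetical illustrations, not Yebr’s actual schema or code.

```python
import sqlite3


def open_legacy_index():
    """Create an in-memory stand-in for the legacy video-ID index (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE videos ("
        " video_id TEXT PRIMARY KEY,"  # unique YouTube video ID
        " title TEXT,"
        " channel TEXT)"
    )
    return conn


def record_stream(conn, video_id, title, channel):
    """Add a video to the index the first time any user streams it."""
    # INSERT OR IGNORE keeps IDs unique: repeat streams add no new rows.
    conn.execute(
        "INSERT OR IGNORE INTO videos (video_id, title, channel) VALUES (?, ?, ?)",
        (video_id, title, channel),
    )


def recommendation_corpus(conn):
    """Everything recommendations can draw on: only videos already streamed."""
    rows = conn.execute("SELECT video_id FROM videos ORDER BY video_id")
    return [row[0] for row in rows]
```

Because rows are only ever added inside `record_stream`, the corpus can grow solely through user activity, which is the limitation the next paragraph describes.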

However, we soon realised that this approach has drawbacks. Most importantly, it keeps newer content from being recommended to users, because songs already in the corpus are far more likely to be cycled and recommended again. Without user activity the index never gets updated, so new users are always presented with outdated songs, which drives engagement even lower.

How It Works

We needed an independent system that continually indexes newer songs. We also realised that depending on a single platform would result in server overloading, so we wanted the system to draw on multiple audio platforms, with bindings for new platforms that are easy to add.

To achieve this, we built a system consisting of two sub-services: a recognition engine and a web crawler. Ideally, the system would crawl audio files, fingerprint them, and index them into a large dataset that could then be used for recommendations and analysis. However, the content on these platforms is not 100% music; it spans many kinds of audio, and Yebr had to be smart enough to identify each kind and process it accordingly. For this we added multiple layers of filtering, based on schematics and AI models, to identify and classify the audio.
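The crawl, classify, fingerprint and index flow can be sketched as a chain of filter layers, where the first layer to return a label decides the class. This is an illustrative sketch only: `rule_layer` (with its one-hour podcast rule), `model_layer` (which reads a precomputed label in place of running a real classifier), and the stub `fingerprint` callable are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class AudioItem:
    source: str                        # platform the crawler found it on
    item_id: str                       # platform-specific ID
    duration_s: float                  # length in seconds
    model_label: Optional[str] = None  # stand-in for an AI classifier's output


Layer = Callable[[AudioItem], Optional[str]]


def rule_layer(item: AudioItem) -> Optional[str]:
    # Hypothetical rule: very long uploads are treated as podcasts.
    return "podcast" if item.duration_s > 3600 else None


def model_layer(item: AudioItem) -> Optional[str]:
    # Placeholder: a real system would run an audio classifier here.
    return item.model_label


@dataclass
class IndexingPipeline:
    layers: List[Layer]
    fingerprint: Callable[[AudioItem], str]
    index: Dict[str, str] = field(default_factory=dict)

    def classify(self, item: AudioItem) -> str:
        # The first layer that returns a label decides the class.
        for layer in self.layers:
            label = layer(item)
            if label is not None:
                return label
        return "unknown"

    def process(self, item: AudioItem) -> str:
        label = self.classify(item)
        if label == "music":
            # Only music is fingerprinted and indexed in this sketch.
            self.index[self.fingerprint(item)] = f"{item.source}:{item.item_id}"
        return label
```

Ordering cheap rule-based layers before model layers means expensive inference only runs on items the rules could not settle.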

Infographic: https://research.kabeersnetwork.tk/blog/audio/yebr/creating-an-audio-based-indexing-system-in-yebr/infographic.html

Recognition Engine

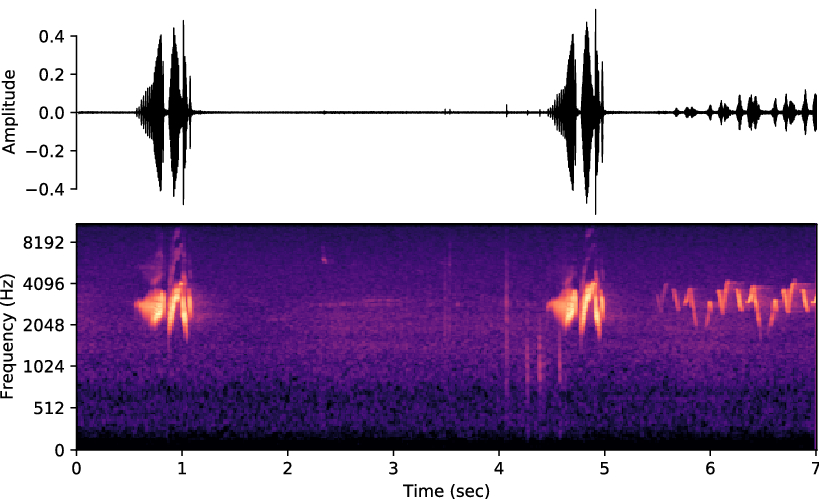

We looked at several options for an AI recognition engine. We wanted something like Shazam’s fingerprinting algorithm, which converts audio clips into a compact, hash-like format. The recognition engine stores these hashes in a MySQL database.
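A minimal sketch of Shazam-style landmark hashing, assuming spectrogram peaks have already been extracted: pairs of peaks are hashed together with their time gap, and a query is matched by voting for the song whose hashes line up at one consistent time offset. The tuning values `FAN_OUT` and `MAX_DELTA_T`, the table layout, and all function names are hypothetical, and `sqlite3` stands in for MySQL so the example is self-contained.

```python
import hashlib
import sqlite3
from collections import Counter

# Hypothetical tuning values, not Yebr's.
FAN_OUT = 5        # number of later peaks each anchor peak is paired with
MAX_DELTA_T = 200  # largest allowed gap (in frames) between paired peaks


def fingerprint(peaks):
    """Hash pairs of spectrogram peaks into (hash, anchor_time) tuples.

    `peaks` is a list of (time_frame, freq_bin) tuples, assumed to come from a
    peak-picking step over the spectrogram (not shown).
    """
    peaks = sorted(peaks)
    hashes = []
    for i, (t1, f1) in enumerate(peaks):
        for t2, f2 in peaks[i + 1 : i + 1 + FAN_OUT]:
            dt = t2 - t1
            if 0 < dt <= MAX_DELTA_T:
                key = f"{f1}|{f2}|{dt}".encode()
                hashes.append((hashlib.sha1(key).hexdigest()[:20], t1))
    return hashes


def open_db():
    # sqlite3 stands in for the MySQL hash store.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fingerprints (hash TEXT, song_id TEXT, anchor_time INTEGER)"
    )
    conn.execute("CREATE INDEX idx_hash ON fingerprints (hash)")
    return conn


def store(conn, song_id, peaks):
    conn.executemany(
        "INSERT INTO fingerprints (hash, song_id, anchor_time) VALUES (?, ?, ?)",
        [(h, song_id, t) for h, t in fingerprint(peaks)],
    )


def match(conn, peaks):
    """Return the song whose hashes line up at one consistent time offset."""
    votes = Counter()
    for h, t_query in fingerprint(peaks):
        rows = conn.execute(
            "SELECT song_id, anchor_time FROM fingerprints WHERE hash = ?", (h,)
        )
        for song_id, t_song in rows:
            votes[(song_id, t_song - t_query)] += 1
    if not votes:
        return None
    (song_id, _offset), _count = votes.most_common(1)[0]
    return song_id
```

Voting on `(song_id, offset)` rather than on `song_id` alone is what makes the match robust: random hash collisions scatter across many offsets, while a true match piles up at a single one.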

Initially, the recognition engine fingerprinted and stored all of the content indexed by the crawler. However, we soon realised that the content library on platforms like YouTube is very diverse, containing not only music but also podcasts and regular videos. Our system could not distinguish these from music, so it fingerprinted and indexed them too. We needed a way to sort the audio into predefined classes so that each class could be processed differently. To do this we relied on the YouTube-8M dataset, which contains a large vocabulary of predefined classes. These tags let us skip resource-intensive computation for audio such as podcasts, which make up only a fraction of Yebr’s index and would not overload the system even if they are available from only a single source. Podcasts are also inherently harder to fingerprint, because their spectrograms show inconsistent highs and lows.
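Class-based processing can be sketched as a routing function over per-class classifier scores. The label groups, the `MIN_SCORE` threshold and the `"metadata_only"` path for speech are all assumptions made for illustration; the real YouTube-8M vocabulary names and Yebr’s actual handling of podcasts may differ.

```python
from typing import Dict

# Hypothetical label groups; real YouTube-8M class names may differ.
MUSIC_CLASSES = {"music", "song", "concert"}
SPEECH_CLASSES = {"podcast", "speech", "talk show"}
MIN_SCORE = 0.5  # hypothetical confidence threshold


def route(scores: Dict[str, float]) -> str:
    """Pick a processing path from per-class classifier scores."""
    if not scores:
        return "skip"
    label, score = max(scores.items(), key=lambda kv: kv[1])
    if score < MIN_SCORE:
        return "skip"           # not confident enough to spend compute on it
    if label in MUSIC_CLASSES:
        return "fingerprint"    # full, resource-intensive fingerprinting
    if label in SPEECH_CLASSES:
        return "metadata_only"  # assumed cheap path: index without fingerprinting
    return "skip"
```

Keeping the routing decision separate from the fingerprinting code means new classes or paths can be added without touching the recognition engine.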

Crawler

Our crawler uses a shell-based architecture: a base API is provided, and bindings for other platforms are added as code plugins. The crawler uses Apache Cassandra to store and retrieve crawl lists and queues, and a cron trigger sends queued crawls to the recognition service, which adds the results to the permanent Yebr song database. Each crawler plugin is responsible for finding its own crawl lists and for returning structured items, i.e. songs or videos.
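A minimal sketch of the plugin shell, assuming a Python implementation: an abstract base class defines the API each platform binding must implement, and a scheduled `cron_tick` drains the queue in batches toward the recognition service. The class and method names are hypothetical, and an in-memory `deque` stands in for the Cassandra-backed queue.

```python
from abc import ABC, abstractmethod
from collections import deque
from typing import Callable, Deque, Dict, Iterable, List


class CrawlerPlugin(ABC):
    """Hypothetical base API that every platform binding implements."""

    name: str = "base"

    @abstractmethod
    def discover_lists(self) -> Iterable[str]:
        """Return crawl-list IDs (e.g. playlists or charts) this plugin found."""

    @abstractmethod
    def fetch_items(self, list_id: str) -> List[Dict[str, str]]:
        """Return structured items (songs or videos) for one crawl list."""


class Crawler:
    def __init__(self) -> None:
        self.plugins: Dict[str, CrawlerPlugin] = {}
        # In-memory stand-in for the Cassandra-backed crawl queue.
        self.queue: Deque[Dict[str, str]] = deque()

    def register(self, plugin: CrawlerPlugin) -> None:
        self.plugins[plugin.name] = plugin

    def crawl(self) -> None:
        # Each plugin finds its own lists and returns its own structured items.
        for plugin in self.plugins.values():
            for list_id in plugin.discover_lists():
                self.queue.extend(plugin.fetch_items(list_id))

    def cron_tick(
        self, send: Callable[[Dict[str, str]], None], batch_size: int = 100
    ) -> int:
        """Run on a schedule: hand up to batch_size queued items to recognition."""
        sent = 0
        while self.queue and sent < batch_size:
            send(self.queue.popleft())
            sent += 1
        return sent
```

Supporting a new platform then means writing one subclass and registering it; the scheduler that invokes `cron_tick` (for example a crontab entry) is outside this sketch.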

Conclusion

This audio-based indexing system represents a significant advancement in how Yebr approaches content discovery and recommendation. By combining web crawling with audio fingerprinting, we’ve created a system that can identify and catalog music content across multiple platforms. AI-based classification ensures that resources are allocated efficiently, focusing on music while filtering out non-music audio such as podcasts and regular videos.

The system’s modular architecture allows easy expansion to new platforms while maintaining performance and accuracy. By using the YouTube-8M dataset for classification, we keep our indexing process focused and resource-efficient. This infrastructure forms the backbone of Yebr’s modern content discovery experience, letting users find music regardless of where it’s hosted while the catalog keeps expanding through automated indexing.

Looking forward, this system enables new features such as cross-platform content matching, enhanced recommendation algorithms, and improved music discovery capabilities that weren’t possible with our previous YouTube-only approach.